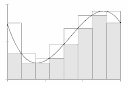

Now suppose \(p > 1\text{.}\) We proceed as we did for the harmonic series, but instead of showing that the sequence of partial sums is unbounded, we show that it is bounded. The terms of the series are positive, so the sequence of partial sums is monotone increasing and converges if it is bounded above. Let \(s_n\) denote the \(n\)th partial sum.

\begin{equation*}
\begin{aligned}
s_1 & = 1 , \\
s_3 & = \left( 1 \right) + \left( \frac{1}{2^p} + \frac{1}{3^p} \right) , \\
s_7 & = \left( 1 \right) + \left( \frac{1}{2^p} + \frac{1}{3^p} \right) +
\left( \frac{1}{4^p} + \frac{1}{5^p} + \frac{1}{6^p} + \frac{1}{7^p} \right) , \\
& ~~ \vdots \\
s_{2^k - 1} & = 1 + \sum_{i=1}^{k-1} \left( \sum_{m=2^i}^{2^{i+1}-1} \frac{1}{m^p} \right) .
\end{aligned}
\end{equation*}
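As a quick sanity check on the grouping (an illustration, not part of the proof), the following Python sketch compares \(s_{2^k-1}\) computed term by term with the grouped form \(1 + \sum_{i=1}^{k-1} \sum_{m=2^i}^{2^{i+1}-1} \frac{1}{m^p}\text{.}\) It assumes an integer exponent \(p\) so that `fractions.Fraction` gives exact arithmetic; the helper names `partial_sum` and `grouped_sum` are hypothetical.

```python
from fractions import Fraction


def partial_sum(n: int, p: int) -> Fraction:
    """Exact n-th partial sum s_n = sum_{m=1}^{n} 1/m^p (integer p assumed)."""
    return sum((Fraction(1, m**p) for m in range(1, n + 1)), Fraction(0))


def grouped_sum(k: int, p: int) -> Fraction:
    """Grouped form of s_{2^k - 1}: 1 + sum_{i=1}^{k-1} (block i)."""
    total = Fraction(1)
    for i in range(1, k):
        # Block i collects the terms 1/m^p with 2^i <= m <= 2^(i+1) - 1.
        total += sum((Fraction(1, m**p) for m in range(2**i, 2**(i + 1))), Fraction(0))
    return total


# The two expressions agree exactly for several k and p.
for p in (2, 3):
    for k in range(1, 8):
        assert partial_sum(2**k - 1, p) == grouped_sum(k, p)
```

Exact fractions are used so that the comparison is an equality test rather than a floating-point approximation.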

Instead of estimating from below, we estimate from above. As \(p\) is positive, \(2^p < 3^p\text{,}\) and hence \(\frac{1}{2^p} + \frac{1}{3^p} < \frac{1}{2^p} + \frac{1}{2^p}\text{.}\) Similarly, \(\frac{1}{4^p} + \frac{1}{5^p} + \frac{1}{6^p} + \frac{1}{7^p} < \frac{1}{4^p} + \frac{1}{4^p} + \frac{1}{4^p} + \frac{1}{4^p}\text{.}\) In general, the block \(\sum_{m=2^i}^{2^{i+1}-1} \frac{1}{m^p}\) has \(2^i\) terms, each at most \(\frac{1}{{(2^i)}^p}\) and strictly smaller when \(m > 2^i\text{.}\) Therefore, for all \(k \geq 2\text{,}\)

\begin{equation*}
\begin{split}
s_{2^k-1}
& = 1+ \sum_{i=1}^{k-1} \left( \sum_{m=2^{i}}^{2^{i+1}-1} \frac{1}{m^p} \right) \\
& < 1+ \sum_{i=1}^{k-1} \left( \sum_{m=2^{i}}^{2^{i+1}-1} \frac{1}{{(2^i)}^p} \right) \\
& = 1+ \sum_{i=1}^{k-1} \left( \frac{2^i}{{(2^i)}^p} \right) \\
& = 1+ \sum_{i=1}^{k-1} {\left( \frac{1}{2^{p-1}} \right)}^i .
\end{split}
\end{equation*}
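The key estimate replaces every term of block \(i\) by its largest term \(\frac{1}{{(2^i)}^p}\text{.}\) A minimal Python sketch, again assuming integer \(p\) for exact `Fraction` arithmetic and using hypothetical helper names (`block_sum`, `block_bound`), checks for a few blocks that the block sum is strictly below \(\frac{2^i}{{(2^i)}^p}\) and that this bound equals \({\left(\frac{1}{2^{p-1}}\right)}^i\text{.}\)

```python
from fractions import Fraction


def block_sum(i: int, p: int) -> Fraction:
    """Exact value of sum_{m=2^i}^{2^(i+1)-1} 1/m^p (integer p assumed)."""
    return sum((Fraction(1, m**p) for m in range(2**i, 2**(i + 1))), Fraction(0))


def block_bound(i: int, p: int) -> Fraction:
    """Upper bound 2^i / (2^i)^p: every m in block i replaced by 2^i."""
    return Fraction(2**i, (2**i) ** p)


for p in (2, 3, 4):
    r = Fraction(1, 2 ** (p - 1))  # the ratio 1/2^(p-1)
    for i in range(1, 8):
        # 2^i terms, each at most 1/(2^i)^p and strictly smaller for m > 2^i.
        assert block_sum(i, p) < block_bound(i, p)
        # 2^i / (2^i)^p simplifies to (1/2^(p-1))^i.
        assert block_bound(i, p) == r**i
```

The strict inequality holds because every block with \(i \geq 1\) contains at least one index \(m > 2^i\text{.}\)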

As \(p > 1\text{,}\) we have \(\frac{1}{2^{p-1}} < 1\text{.}\) Proposition 2.5.5 then says that the geometric series

\begin{equation*}
\sum_{i=1}^\infty {\left( \frac{1}{2^{p-1}} \right)}^i
\end{equation*}

converges. Thus,

\begin{equation*}
s_{2^k-1} < 1+ \sum_{i=1}^{k-1} {\left( \frac{1}{2^{p-1}} \right)}^i
\leq 1+ \sum_{i=1}^\infty {\left( \frac{1}{2^{p-1}} \right)}^i .
\end{equation*}

For every \(n\) there is a \(k \geq 2\) such that \(n \leq 2^k-1\text{,}\) and as \(\{ s_n \}_{n=1}^\infty\) is monotone increasing, \(s_n \leq s_{2^k-1}\text{.}\) So for all \(n\text{,}\)

\begin{equation*}
s_n < 1+ \sum_{i=1}^\infty {\left( \frac{1}{2^{p-1}} \right)}^i .
\end{equation*}

Thus the sequence of partial sums is bounded above; being monotone increasing, it converges, and hence the series converges.
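Since \(\sum_{i=1}^\infty r^i = \frac{r}{1-r}\) for \(0 < r < 1\text{,}\) the bound above equals \(1 + \frac{r}{1-r} = \frac{1}{1-2^{1-p}}\) with \(r = \frac{1}{2^{p-1}}\text{.}\) The Python sketch below is a floating-point illustration, not a proof: for a few arbitrarily chosen exponents it checks that the first 10,000 partial sums increase and stay below this bound. The function names `p_series_bound` and `partial_sums` are hypothetical.

```python
import math


def p_series_bound(p: float) -> float:
    """1 + sum_{i>=1} r^i with r = 1/2^(p-1); the geometric tail equals r/(1-r)."""
    if p <= 1:
        raise ValueError("the bound requires p > 1")
    r = 2.0 ** (1 - p)
    return 1 + r / (1 - r)


def partial_sums(p: float, n_max: int) -> list[float]:
    """Floating-point partial sums s_1, ..., s_{n_max} of sum 1/n^p."""
    sums, total = [], 0.0
    for n in range(1, n_max + 1):
        total += 1.0 / n**p
        sums.append(total)
    return sums


for p in (1.1, 1.5, 2.0, 3.0):
    bound = p_series_bound(p)
    s = partial_sums(p, 10_000)
    assert all(a < b for a, b in zip(s, s[1:]))  # monotone increasing
    assert s[-1] < bound                         # bounded above by 1 + r/(1-r)
    print(f"p={p}: s_10000 = {s[-1]:.6f}, bound = {bound:.6f}")
```

For \(p = 2\) the bound is exactly \(2\text{,}\) since then \(r = \frac{1}{2}\text{.}\) The bound is far from sharp when \(p\) is close to \(1\text{,}\) but the proof only needs some finite upper bound.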